3 Ways Organizations Can Move Towards Health Equity

Key Takeaways

Growing interest in health equity is driving the creation of Chief Health Equity Officer roles and interest in new business practices that use SDoH and AI. Data analytics can be both a source of bias that creates health inequities and a tool to address them. Creating a culture of health equity in data science for health will be instrumental in creating social impact from AI.

Curated datasets, synthetic data and open datasets can help address bias in data. Work on SDoH, backed by the Office of the National Coordinator for Health IT (ONC) and the Gravity Project, can draw on emerging research on synthetic data and open datasets to address bias in models and support health equity efforts.

Participatory data methods have been utilized around the world to fill in the gaps in public health data. Data scientists, epidemiologists and experts in geospatial analytics have used participatory methods to generate new data while also increasing engagement in community health. These methods also frequently raise new research questions from the community and can build trust in healthcare organizations.

Introduction

In February 2022, I’ll be participating in a WEDI-sponsored spotlight on health equity, where I’ll be exploring the links between AI, social determinants of health (SDoH), and health equity. This comes at a time when numerous payers and providers are creating new roles, such as Chief Health Equity Officer, to address this issue. We expect to see a great deal more attention to this area in the future. However, we should not underestimate the challenges that organizations face.

So, what might an organization do to help build a strategic approach to making inroads on health equity?

1. Improve access to data on SDoH for analytics

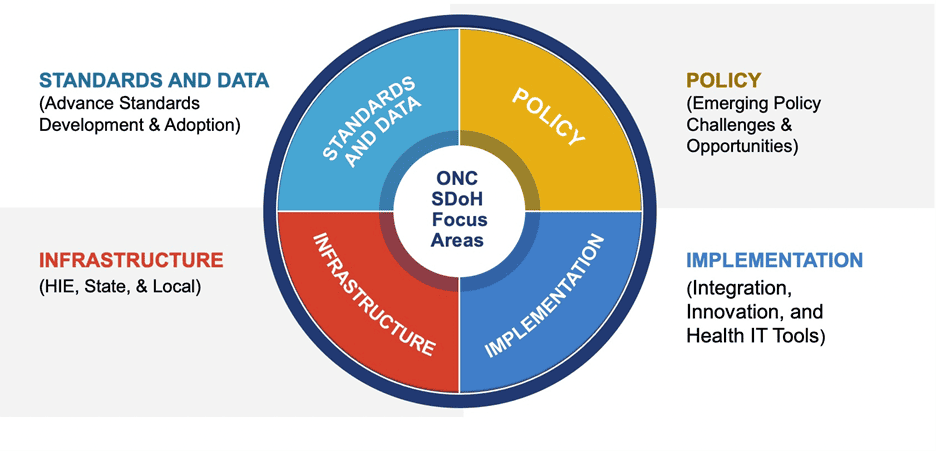

One of the biggest challenges in addressing health equity at the organizational level is access to quality data on SDoH. Such data gives policies and interventions at the local level a grounded, accurate basis. In 2021 I wrote about the work at ONC to address SDoH data standards. That effort centers on the United States Core Data for Interoperability (USCDI) Version 2 data elements for interoperable data exchange, which now include a number of SDoH elements.

HL7’s Gravity Project is an important initiative in this regard. The project addresses data across four clinical areas: screening, assessment and diagnosis, goal setting, and treatment/interventions. Some health IT vendors, such as HSBlox, John Snow Labs, 3M and Linguamatics, are using natural language processing (NLP) to surface SDoH data from unstructured data, which constitutes approximately 80% of medical records.
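To make the idea of surfacing SDoH signals from free text concrete, here is a deliberately simple sketch. It is not how any of the vendors above work; production NLP handles negation, context and clinical vocabularies. The domain names, phrase lists and the `tag_sdoh_mentions` function are all hypothetical and chosen only for illustration.

```python
import re

# Hypothetical phrase lists per SDoH domain (illustrative only, not a standard vocabulary)
SDOH_KEYWORDS = {
    "food_insecurity": ["food insecurity", "skipping meals", "food bank"],
    "housing_instability": ["homeless", "eviction", "staying with friends"],
    "transportation": ["no transportation", "missed the bus", "cannot drive"],
}


def tag_sdoh_mentions(note):
    """Return the SDoH domains whose phrases appear in a free-text note.

    This is plain phrase matching: it does not handle negation
    ("denies food insecurity") or misspellings.
    """
    text = note.lower()
    found = []
    for domain, phrases in SDOH_KEYWORDS.items():
        # Match whole phrases on word boundaries
        if any(re.search(r"\b" + re.escape(p) + r"\b", text) for p in phrases):
            found.append(domain)
    return found


# Made-up example note
note = "Patient reports skipping meals this month and is facing eviction."
tags = tag_sdoh_mentions(note)
print(tags)
```

Even a toy like this shows why structured SDoH standards matter: once a mention is tagged, it still has to be mapped to a shared data element before it can be exchanged or analyzed.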

2. Remember the foundational elements: Do No Harm

Once developers have access to SDoH data, we need to think through the elements that could cause harm or obscure health equity issues, and so detract from more proactive efforts to deploy models that directly contribute to fairness and health equity.

The FDA continues to develop guidelines for the regulation of Software as a Medical Device (SaMD). Yet many AI models and deployments may not come under the FDA's purview, given that many applications do not involve clinical decision support or medical imaging. Many AI applications involve risk scores and population health efforts, where bias could result in inadequate service provision and contribute to health disparities.

Auditing both data and model performance for bias is essential to addressing the potential harms in models, as we have seen in examples such as the Optum algorithm that Obermeyer et al. examined in 2019.

The Optum example illustrates the need to critically examine both the data sources (are they representative of the population?) and the variables of interest in the model. In this case, are claims data a reliable variable or proxy for understanding the role of race, ethnicity, sexuality, etc. in the outcomes?
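One simple way to start on the representativeness question is to compare each group's share of the dataset with its share of the population served. The sketch below is only an illustration: the group labels, counts, population shares and the 2-point threshold are invented, and `representation_gaps` is a hypothetical helper rather than part of any audit toolkit.

```python
def representation_gaps(dataset_counts, population_shares):
    """Return (dataset share - population share) for each group, rounded to 3 places."""
    total = sum(dataset_counts.values())
    if total == 0:
        raise ValueError("dataset_counts must contain at least one record")
    gaps = {}
    for group, pop_share in population_shares.items():
        data_share = dataset_counts.get(group, 0) / total
        gaps[group] = round(data_share - pop_share, 3)
    return gaps


# Hypothetical number of patients per group in a training dataset
dataset_counts = {"group_a": 700, "group_b": 200, "group_c": 100}
# Hypothetical share of each group in the population the organization serves
population_shares = {"group_a": 0.55, "group_b": 0.30, "group_c": 0.15}

gaps = representation_gaps(dataset_counts, population_shares)
# Flag groups whose dataset share falls more than 2 points below their population share
underrepresented = [g for g, gap in gaps.items() if gap < -0.02]
print(gaps)
print(underrepresented)
```

A check like this says nothing about whether the variables themselves are sound proxies, which is the second half of the question above; it only shows who is missing from the data.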

This issue extends beyond claims data to any dataset with hidden bias from which an AI algorithm is built. In the IoT realm, does data from wearables or pulse oximeters require adjustments because darker skin absorbs more light at the green LED wavelength? Rigorous audits for bias in data and models must be foundational to improving health equity.
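On the model side, a basic audit compares an error rate across groups. The sketch below computes the false negative rate (patients who needed care but were not flagged) per group. The records are fabricated for illustration, the group labels are placeholders, and `false_negative_rate_by_group` is a hypothetical helper; a real audit would use several metrics and far more data.

```python
from collections import defaultdict


def false_negative_rate_by_group(records):
    """Compute the false negative rate per group.

    records: iterable of (group, needs_care, flagged) tuples, where
    needs_care is the true outcome and flagged is the model's decision.
    Groups with no patients who needed care are omitted.
    """
    missed = defaultdict(int)
    needed = defaultdict(int)
    for group, needs_care, flagged in records:
        if needs_care:
            needed[group] += 1
            if not flagged:
                missed[group] += 1
    return {group: missed[group] / needed[group] for group in needed}


# Fabricated records: (group, truly needed care?, flagged by the model?)
records = [
    ("group_a", True, True), ("group_a", True, True),
    ("group_a", True, False), ("group_a", True, True),
    ("group_a", False, False),
    ("group_b", True, False), ("group_b", True, False),
    ("group_b", True, True), ("group_b", True, False),
    ("group_b", False, False),
]

rates = false_negative_rate_by_group(records)
disparity = max(rates.values()) - min(rates.values())
print(rates)
print(disparity)
```

In this made-up data the model misses one in four patients who need care in one group and three in four in the other. A gap of that kind is what an audit should surface before deployment.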

3. Note the National Academy of Medicine AI Lifecycle Framework

In 2020 the National Academy of Medicine (NAM) published a report on AI in Healthcare that included a lifecycle assessment framework. The framework offered by NAM with further elaboration from IBM provides a useful guide for developers to follow.

Figure 2: Ethical AI, Health Equity and Racial Justice Integrated Across the Lifecycle of AI Development (Source: National Academy of Medicine and Dankwa-Mullan et al. 2021)

The Lifecycle Approach adapted from NAM by IBM, as illustrated above, includes: needs assessments, workflows, defining target states, sufficient infrastructure to develop and sustain the AI system, implementation, monitoring and evaluating performance, and updating and/or replacing the system in a sustainable way. The authors of this framework note that equity and fairness form the fifth aim of the Quintuple Aim.

Conclusion: Data Science for Health Equity

Potential Solutions: There are growing calls for the creation of curated datasets, open databases and open science in AI to improve the quality of models. Datasets could be created through federated learning-based collaborations across institutions as well as through synthetic data. Synthea has been working with the Veterans Administration on exactly this kind of use case. Work in this area is still in its early days, but a small number of academic researchers are developing synthetic data methodologies to address bias. M-Sense offers synthetic data for research on migraine headaches, for example, while other vendors, such as Replica Analytics (acquired by Aetion) and Statice.ai, offer a number of synthetic data applications in healthcare.

Post-Hoc Evaluation: The issue of implementation and impact on patient outcomes is essential. Did the model/CDS interfere with patient-provider interactions and relationships? How well was the model integrated into clinical workflows, and what impacts on different populations and on quality of care were experienced? Did use of the model contribute to better outcomes for historically neglected populations? How could these outcomes be improved to contribute to health equity?

One can also include the use of data science for original research that analyzes specific SDoH issues at the local level to better understand the underlying causal mechanisms or gaps in care that drive poor outcomes. We covered the “vulnerability mapping” work that Jvion did in the early days of COVID, which created a heat map of vulnerable communities where SDoH and environmental factors put communities at higher risk.

Propeller Health collects data on the location of users when they activate an asthma inhaler to fill in public health data gaps for asthma triggers. Air sensors and our understanding of the biological mechanisms for air pollutants and disease are growing daily. One could imagine a Waze for local air pollutants that advises on best routes for walking, commuting or exercise based on real-time air quality data.

We have many gaps in public health and population level data that can result in adverse consequences for low-income communities. In this area, healthcare organizations can partner with community-based organizations for participatory data collection. This will also contribute to better understanding of the role of data and analytics in communities. There are many successful examples of projects around the world to draw upon. Participatory exercises can also provide an opportunity to correct biases in existing data sets.

Finally, we will need to deepen our discussions and debates over the ethics of prediction. Are predictive algorithms unfairly predetermining futures of marginalized groups, based on problematic data, data collection, and models with biases? While much of the discussion on responsible AI has focused on the risks of AI, we should not discount the impact of establishing a culture of health equity in AI; we could use data science to explore the sources of health inequities and provide policy insights, as well as enact business practices with positive social impact.
